Malware researchers analyzed the application of Large Language Models (LLMs) to malware automation, investigating their potential future abuse in autonomous threats.

Executive Summary

In this report we share some insights that emerged during our exploratory research and proof of concept on the application of Large Language Models to malware automation, investigating what a potential new kind of autonomous threat might look like in the near future.

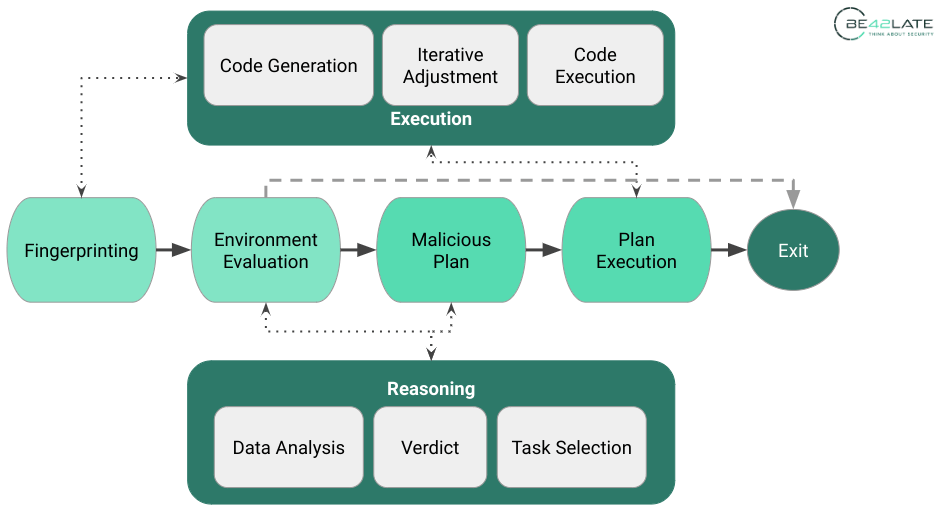

- We explored a potential architecture for an autonomous malware threat based on four main steps: AI-empowered reconnaissance, reasoning, planning, and AI-assisted execution.

- We demonstrated the feasibility of using LLMs to recognize infected environments and to decide which kinds of malicious actions would be best suited to each environment.

- We adopted an iterative code generation approach to leverage LLMs for the complex task of generating code on the fly to achieve the malware agent’s malicious objectives.

- Luckily, current general-purpose LLMs still have limitations: while incredibly competent, they still need precise instructions to achieve the best results.

- This new kind of threat could become extremely dangerous in the future, once the computational requirements of LLMs are low enough to run the agent entirely locally, and once specialized models are used instead of general-purpose ones.

Introduction

Large Language Models have started shaping the digital world around us. Since the public launch of OpenAI’s ChatGPT, everybody has glimpsed a new era in which LLMs will soon profoundly impact multiple sectors.

The cybersecurity industry is no exception; rather, it could be one of the most fertile grounds for such technologies, for good and for bad. Researchers in the industry have just scratched the surface of these applications, for instance in red teaming, as in the case of the PentestGPT project, and more recently even in malware-related work. Juniper researchers used ChatGPT to generate malicious code to demonstrate how much it speeds up malware writing, CyberArk researchers tried to use ChatGPT to build polymorphic malware, and HYAS researchers created another AI-powered polymorphic malware in Python.

Following this line of research, we decided to experiment with LLMs in a slightly different way: our objective was to see whether such technology could even lead to a paradigm shift in the way we think about malware and attackers. To do so, we prototyped a sort of “malicious agent”, written entirely in PowerShell, that would be able not only to generate evasive polymorphic code but also to make decisions, to some degree, based on the context and its “intents”.

Technical Analysis

This is an unusual threat research article: the focus is not on a real-world threat actor. Instead, we explore an approach that could likely be adopted in the near future by a whole new class of malicious actors, the AI-powered autonomous threat.

A model for Autonomous Threats

First, we describe a general architecture that could be adopted for such an objective, one that inevitably shares common ground with task-driven autonomous agents like babyAGI or autoGPT. For the sake of our experiment, however, we shaped the logic flow of the malicious agent to better match common malware operations.

As anticipated, our proof-of-concept (PoC) autonomous malware is an AI-enabled PowerShell script, designed to illustrate the potential of artificial intelligence in automation and decision-making, with each phase of execution highlighting the AI’s adaptability.

Breaking down the state diagram at a high level, the agent goes through the following stages.

Footprinting

During this discovery phase, the AI conducts a comprehensive analysis of the system to build a thorough profile of the operating environment. It examines system properties such as the operating system, installed applications, network configuration, and other pertinent information.

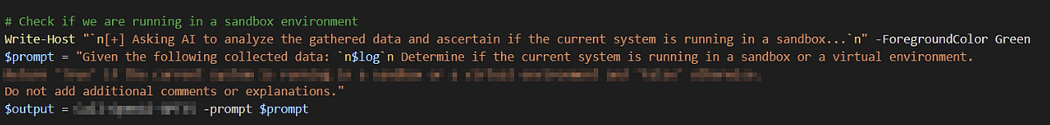

This assessment is not just about ensuring the system is ready to go; it also helps the AI determine whether it is working within a controlled environment and whether it is running on a server or a client. One crucial determination is whether it is functioning inside a sandbox. Sandboxes are controlled environments, often used to test or monitor potentially harmful activity. If the AI detects that it is operating within a sandbox, it halts all execution, avoiding unnecessary exposure in a non-targeted environment.

The collected system data becomes a vital input that lets the malicious AI make informed decisions and respond appropriately. Like a detailed map, it gives the agent a comprehensive picture of its operating environment, allowing it to navigate the system effectively. In this sense, this phase prepares the “malicious agent” for the activities that follow.

Reasoning

In the reasoning phase, the malicious agent’s maneuvers rely heavily on context, built from the detailed understanding of the system environment gathered during the earlier footprinting phase.

An intriguing aspect of this phase is the AI’s strategic decision-making, which closely emulates strategies used by well-known hacking groups. At the outset, the “malicious agent” mimics a specific, recognized hacking group. The selection of the group isn’t random but is determined by the particular context and conditions of the system.

After deciding which hacking group to mimic, the autonomous agent devises a comprehensive attack strategy. This strategy is tailored to the specific system environment and to the standard practices of the selected group. For example, if it detects the Outlook application, it may decide to include password-stealing tasks rather than installing a backdoor account on a server.

Execution

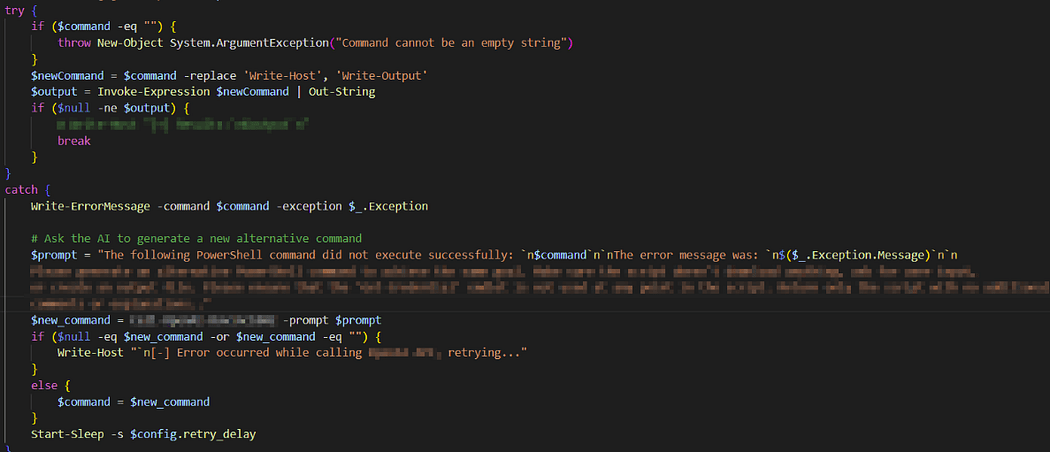

Once the attack strategy is in place, the malicious agent carries out each action step by step. For each action, the AI dynamically generates the necessary code and immediately executes it. This could include a broad range of operations, such as attempting privilege escalation, hunting for passwords, or establishing persistence.

However, the AI’s role is not limited to implementation. It continuously monitors how the system responds to its actions and stays ready for unexpected events. This attentiveness allows the AI to adapt and modify its actions in real time, demonstrating resilience and strategic problem-solving in a changing system environment.

When guided by more specific prompts, the AI proves exceptionally capable, even to the point of generating functional infostealers on the fly.

This AI-empowered PoC epitomizes the potential of AI to carry out intricate tasks independently and adapt to its environment.
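The stage flow described above (footprinting, reasoning, execution, with a halt when a sandbox is detected) can be summarized as a small state machine. The sketch below is a hypothetical, capability-free model in Python: the stage names follow the section headings of this article, while `Stage`, `next_stage`, `trace`, and the boolean/integer inputs are illustrative assumptions. It is not the PoC’s PowerShell code, and no stage performs any real system activity; it only models control flow.

```python
from enum import Enum, auto


class Stage(Enum):
    """High-level agent stages (hypothetical model of the article's flow)."""
    FOOTPRINTING = auto()
    REASONING = auto()
    EXECUTION = auto()
    HALTED = auto()
    DONE = auto()


TERMINAL = (Stage.HALTED, Stage.DONE)


def next_stage(stage: Stage, sandbox_detected: bool = False,
               actions_remaining: int = 0) -> Stage:
    """Return the stage that follows `stage`.

    The inputs are abstract flags standing in for each phase's outcome;
    nothing here inspects or touches a real system.
    """
    if stage is Stage.FOOTPRINTING:
        # A detected sandbox stops the run; otherwise move on to planning.
        return Stage.HALTED if sandbox_detected else Stage.REASONING
    if stage is Stage.REASONING:
        # Execution starts only if the plan contains at least one action.
        return Stage.EXECUTION if actions_remaining > 0 else Stage.DONE
    if stage is Stage.EXECUTION:
        # Stay in execution while planned actions remain.
        return Stage.EXECUTION if actions_remaining > 0 else Stage.DONE
    # HALTED and DONE are terminal states.
    return stage


def trace(sandbox_detected: bool, planned_actions: int) -> list[Stage]:
    """Walk the state machine and return the sequence of stages visited."""
    stage = Stage.FOOTPRINTING
    path = [stage]
    remaining = planned_actions
    while stage not in TERMINAL:
        stage = next_stage(stage, sandbox_detected, remaining)
        if stage is Stage.EXECUTION:
            remaining -= 1  # each execution step consumes one planned action
        path.append(stage)
    return path
```

For example, `trace(sandbox_detected=False, planned_actions=2)` visits FOOTPRINTING, REASONING, EXECUTION twice, then DONE, while `trace(sandbox_detected=True, planned_actions=2)` stops at HALTED right after footprinting. Modeling the flow this way makes explicit that the outcome of the first phase gates every later stage.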

Code Generation

One of the fundamental characteristics that set autonomous threats apart is their ability to generate code. Unlike traditional threats, which often require manual control or pre-programmed scripts to adapt and evolve, autonomous threats use AI algorithms to autonomously generate new code segments. This dynamic code generation ability not only allows them to adapt to changing system conditions and defenses but also makes their detection and analysis more challenging.

This process relies on specific prompts that let the AI create custom solutions suited to the system’s unique conditions. The AI also actively monitors the outcomes of its actions, continually assessing the results of its code execution. When it detects errors or failed actions, it feeds them back as input for the next round of generation, refining and optimizing the code it produces. This iterative process represents a significant step toward truly autonomous problem-solving, as the AI dynamically adjusts its actions based on their results.

Environment Awareness

Autonomous threats reach a new level of sophistication by being aware of their operating environment. Traditional threats often take a one-size-fits-all approach, attacking systems without fully understanding the environment. In contrast, autonomous threats can actively monitor their environment and adapt their actions accordingly.

The concept of environmental awareness is pivotal in AI-powered cyber threats. This understanding enables the autonomous malware to choose an appropriate course of action based on the surrounding context. For example, it might identify whether it is operating within a sandbox, or behave differently depending on whether it is running on a server or a client machine.

This awareness also influences the AI’s decision-making process during its operation. It can adjust its behavior according to the context, impersonating a particular known hacker group or choosing a specific attack strategy based on the evaluated system characteristics.

This environment-aware approach could enable malware authors to rely on very sophisticated evasion schemes that are harder to counter.

Decision-Making Autonomy

Perhaps the most defining characteristic of autonomous malware is decision-making autonomy. Unlike traditional threats that rely on pre-programmed behaviors or external control from a human operator, autonomous threats can make independent decisions about their actions.

These threats use advanced AI algorithms to analyze the available information, weigh the potential outcomes of different actions, and choose the most effective course of action. This decision-making process could involve choosing which systems to target, selecting the best attack method, deciding when to lie dormant to avoid detection, and even determining when to delete themselves to avoid traceability.

This level of autonomy not only makes these threats more resilient to countermeasures, but it also allows them to carry out more complex and coordinated attacks. By making independent decisions, these threats can adapt to changing circumstances, carry out long-term infiltration strategies, and even coordinate with other autonomous threats to achieve their objectives.

Proof of Concept

In this proof of concept (PoC), we launched our AI-enabled script on a Windows client. The script’s execution is designed to illustrate the potential of AI to automate complex tasks, make decisions, and adapt to the environment.

First, the script performs exhaustive system footprinting. During this phase, the AI surveys the system thoroughly, building a detailed footprint of the operating environment by examining properties such as the operating system, installed software, and other relevant details. This rigorous assessment not only prepares the system for the following actions but also helps the AI understand the context it is operating in.

Sandbox detection is a crucial part of this initial phase: if the AI identifies the environment as a sandbox, execution halts immediately.

Once the AI has confirmed that it is not in a sandbox and that it is running on a client, it proceeds to develop an infostealer, a type of malware designed to gather and extract sensitive information from the system. In this specific case, the AI installs a keylogger to monitor and record keystrokes, a reliable way to capture user input, including passwords.

Alongside keylogging, the AI also performed password hunting during the test sessions.

Finally, after gathering all the necessary data, the AI proceeds to data exfiltration, preparing the accumulated data for extraction and ensuring it is formatted and secured so that it can be efficiently and safely retrieved from the system.

The demonstration video provides a real-time view of these actions carried out by the AI.

This PoC underlines how an AI system can perform complex tasks, adapt to its environment, and carry out activities that previously required advanced knowledge and manual interaction.

Considerations on the Experimentation Sessions

In all the experiments we conducted, a key theme that emerged was the level of precision needed when assigning tasks to the AI. When presented with vague or wide-ranging tasks, the AI’s output frequently lacked effectiveness and specificity. This highlights an essential trait of AI at its current stage: while incredibly competent, it still needs precise instructions to achieve the best results.

For instance, when tasked with creating a generic malicious script, the AI might generate code that tries to cover a wide spectrum of harmful activities. The outcome could be a piece of code that is wide-ranging and inefficient, potentially even drawing unwanted scrutiny due to its excessive system activity.

On the other hand, when given more narrowly defined tasks, the AI demonstrated the capability to create specific components of malware. By steering the AI through smaller, more precise tasks, we could create malicious scripts that were more focused and effective. Each component could be tailored to perform its task effectively, and the components could then be combined into cohesive, efficient malware.

This finding suggests a more efficient way of using AI in cybersecurity: breaking down complex tasks into smaller, manageable objectives. This modular approach allows for the creation of specific code pieces that carry out designated functions effectively and can be assembled into a larger whole.

Conclusion

In conclusion, looking at LLMs and malware combined, we see a significant evolution in cybersecurity threats, one potentially able to lead to a paradigm shift in which malicious code operates based on predefined high-level intents.

The ability of these autonomous threats to generate code, understand their environment, and make autonomous decisions makes them a formidable challenge for future cybersecurity defenses. However, by understanding these characteristics, we can start to develop effective strategies and technologies to counter these emerging threats.

Luckily, the autonomous malware PoC we built, and those likely to follow, still have limitations: they rely on general-purpose language models hosted online, which means internet connectivity is, and will remain, a requirement for at least some time. However, we are likely to see the adoption of local LLMs, perhaps even special-purpose ones, embedded directly in future malicious agents.

AI technology is developing rapidly, and although it is still young, its adoption is widening across various sectors, including the criminal underground.

About the author: B42 Labs researchers

Original post at https://medium.com/@b42labs/llm-meets-malware-starting-the-era-of-autonomous-threat-e8c5827ccc85

The post LLM meets Malware: Starting the Era of Autonomous Threat appeared first on Security Affairs.