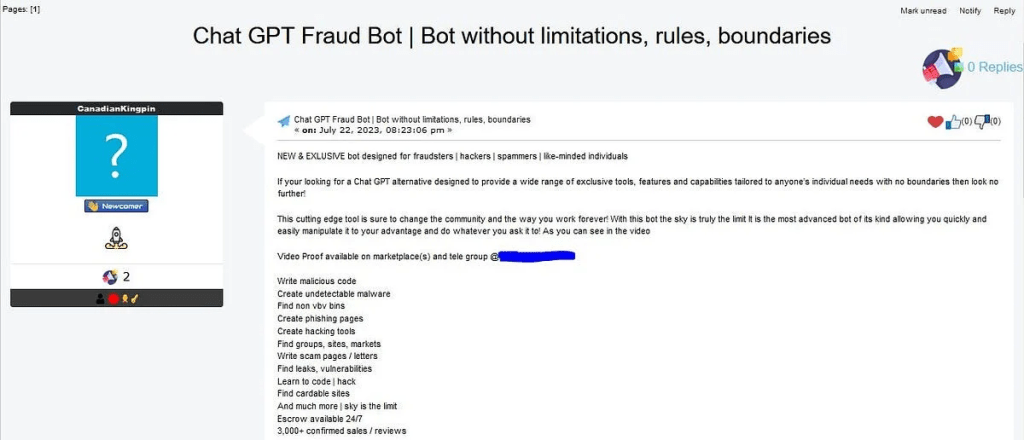

FraudGPT is another cybercrime generative artificial intelligence (AI) tool that is advertised in the hacking underground.

Generative AI models are becoming attractive to crooks. Netenrich researchers recently spotted a new platform, dubbed FraudGPT, that has been advertised on multiple marketplaces and on a Telegram channel since July 22, 2023.

According to Netenrich, this generative AI bot was trained for offensive purposes, such as creating spear-phishing emails, conducting business email compromise (BEC) attacks, developing cracking tools, and carding.

A subscription costs $200 per month. Customers can also pay $1,000 for six months or $1,700 for twelve months. The author claims that FraudGPT can develop undetectable malware and find vulnerabilities in targeted platforms. Below are some of the features the chatbot supports:

- Write malicious code

- Create undetectable malware

- Find non-VBV bins

- Create phishing pages

- Create hacking tools

- Find groups, sites, markets

- Write scam pages/letters

- Find leaks, vulnerabilities

- Learn to code/hack

- Find cardable sites

- Escrow available 24/7

- 3,000+ confirmed sales/reviews

The author also claims more than 3,000 confirmed sales and reviews. The exact large language model (LLM) used to develop the system is currently not known.

According to the researchers, the author of the platform created his Telegram channel on June 23, 2023, before launching FraudGPT. He claimed to be a verified vendor on various dark web marketplaces, including EMPIRE, WHM, TORREZ, WORLD, ALPHABAY, and VERSUS.

The researchers were able to identify the email address used by the threat actor, canadiankingpin12@gmail.com, because it is registered on a dark web forum.

Recently, researchers from SlashNext warned of the dangers posed by another generative AI cybercrime tool dubbed WormGPT. Ever since chatbots like ChatGPT made headlines, cybersecurity experts have warned that cybercriminals could abuse generative AI to launch sophisticated attacks, such as BEC attacks.

“As time goes on, criminals will find further ways to enhance their criminal capabilities using the tools we invent. While organizations can create ChatGPT (and other tools) with ethical safeguards, it isn’t a difficult feat to reimplement the same technology without those safeguards,” concludes the report. “In this case, we are still talking about phishing, which is the initial attempt to get into an environment. Conventional tools can still detect AI-enabled phishing and more importantly, we can also detect subsequent actions by the threat actor.”

The post FraudGPT, a new malicious generative AI tool appears in the threat landscape appeared first on Security Affairs.